The future of TV: 10 tech steps to the ultimate TV experience

From 4K to HDR to 8K and beyond: the road to TV-viewing perfection is paved with new technologies. And acronyms. Lots and lots of acronyms.

The first high-definition broadcasts in the UK started over ten years ago, when Sky launched its HD channels in May 2006. The TV tech landscape has advanced massively since then, with 4K Ultra HD – four times the resolution of HD – now becoming a mainstream and affordable option.

Thanks to Netflix, Amazon Prime Video, Sky and BT Sport making 4K content available - not forgetting that there are over 100 4K Blu-ray discs now on sale - you can now watch a whole host of 4K content in a variety of ways. And that’s not all. 4K has kick-started a slew of innovations that are already making their way to your TV. So, if you want the best possible video (and audio) quality, brace yourself for some changes.

From HDR to WCG, 4K to 8K, Yoeri Geutskens, the fount of knowledge behind the @UHD4K Twitter account, looks at the key television technology that will improve your viewing experience over the coming months and years...

4K Ultra HD resolution

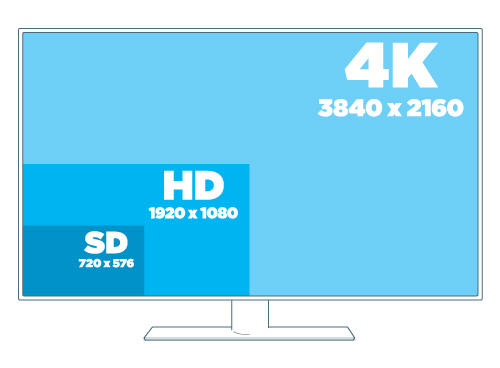

Around ten years ago, the TV industry started making the switch from standard definition (SD) TV to high definition (HD) TV. This was all about increasing resolution: from 480 (USA and Japan) and 576 (UK and Europe), to 720 or 1080 lines. With this came the move from interlaced to progressive video, which gave us an ultimate resolution for HD TV of 1920 × 1080p.

But it didn't stop there. Over the last few years, we have seen the steady rise of 4K Ultra HD, with a resolution of 3840 × 2160. This delivers twice as many pixels as HD both horizontally and vertically, and so four times as many pixels in total.
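For anyone who wants to check the "four times" claim, here is a minimal sketch of the arithmetic using the two resolutions quoted above. The function and variable names are purely illustrative.

```python
# Pixel counts for the HD and 4K Ultra HD resolutions quoted above.
HD = (1920, 1080)
UHD_4K = (3840, 2160)


def pixel_count(resolution):
    """Return the total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


hd_pixels = pixel_count(HD)
uhd_pixels = pixel_count(UHD_4K)

print(f"HD: {hd_pixels:,} pixels")
print(f"4K Ultra HD: {uhd_pixels:,} pixels")
print(f"Ratio: {uhd_pixels / hd_pixels:.0f}x")
```

Doubling both the width and the height is what produces the fourfold jump in total pixels.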

Ultra HD TVs first arrived on the market in 2013, and they have since been adopted more quickly than HD TV sets were a decade earlier. This is thanks in part to consumers' habit of buying ever larger screens, many of which are now 4K.

The premium price of the early 4K sets has been reduced too, making a 4K set much more affordable. You can now get a 40in 4K TV for just £500.

If you're prepared to spend over £1000 on a new TV, you should choose one with 4K (and a bigger screen size).

High Dynamic Range (HDR)

The hottest buzzword in TV technology (apart from 4K) is High Dynamic Range (HDR). The HDR technology in your TV aims to deliver better pictures with brighter whites, darker blacks and a wider range of colours.

Confusingly for consumers, there are various technical solutions for implementing HDR, which means there are different versions of HDR on the market. The most prominent ones are HDR10, Dolby Vision, and the broadcast-standard Hybrid Log Gamma (HLG). Samsung is even developing its own version called HDR10+.

Expect the standard HDR10 to come hand-in-hand with most new 4K TVs, as well as most 4K Blu-rays.

Dolby Vision HDR is currently supported only on LG's 2017 OLED TVs, and by some streaming services such as Netflix. However, it's also slowly making its way on to select 4K Blu-rays.

Meanwhile, Samsung's HDR10+ is being backed by Amazon Video, while live TV broadcasters and equipment makers favour HLG, which is more compatible with existing equipment and workflows.

It's a bit of a minefield, but there's no doubt that HDR (in its many forms) will continue to be one of the main talking points in the next few years.

Connectivity and HDMI

HDMI remains the standard connection for sending HD and 4K video. HDMI 1.4a was good enough for HD and even 4K, as long as the frame rate was no higher than 30fps. HDMI 2.0, first introduced in 2013, raised the capability to 4K at 60fps. It also enables a newer version of HDMI’s content protection technology, HDCP 2.2.
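To see why frame rate matters so much for the connection, here is a rough back-of-the-envelope sketch. It assumes 8 bits per colour channel and three channels per pixel, and it ignores blanking intervals and link-encoding overhead, so real-world headroom is smaller than these figures suggest. The link rates used are the commonly quoted maximums of 10.2Gbps for HDMI 1.4 and 18Gbps for HDMI 2.0.

```python
# Rough estimate of the uncompressed video data rate for 4K at 30fps and 60fps.
# Assumptions (not from the article): 8 bits per colour channel, three channels
# per pixel, and no allowance for blanking intervals or link-encoding overhead.
WIDTH, HEIGHT = 3840, 2160
BITS_PER_PIXEL = 8 * 3

# Commonly quoted maximum link rates, in gigabits per second.
LINK_RATE_GBPS = {"HDMI 1.4": 10.2, "HDMI 2.0": 18.0}


def raw_rate_gbps(fps):
    """Return the active-pixel data rate in Gbps for the given frame rate."""
    return WIDTH * HEIGHT * BITS_PER_PIXEL * fps / 1e9


for fps in (30, 60):
    rate = raw_rate_gbps(fps)
    for name, limit in LINK_RATE_GBPS.items():
        verdict = "fits" if rate <= limit else "does not fit"
        print(f"4K at {fps}fps needs about {rate:.2f} Gbps: "
              f"{verdict} within {name} ({limit} Gbps)")
```

Under these simplified assumptions, 4K at 30fps sits comfortably inside the older link rate, while doubling to 60fps pushes the requirement past it.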

To play 4K content from 4K Blu-rays, set-top boxes and games consoles, 4K TVs and AV receivers need to be HDCP 2.2-compliant.

Encoding and decoding standards

Ultra HD has introduced new compression standards. Although Ultra HD encoding is possible with MPEG-4 AVC (also known as H.264), a more advanced and efficient technology called HEVC or H.265 has become the new standard.

This matters because 4K requires a lot more bits, so better compression helps broadcasters, satellite operators and cable operators make the best use of limited bandwidth.

Netflix and Amazon Video use H.265 when streaming 4K content, so your 4K TV also needs to support the standard. It's worth noting that first-gen 4K TVs supported only H.264, so they can't decode today's HEVC-encoded 4K content. YouTube, by contrast, uses the royalty-free VP9 codec.

Decoding chips for 4K TVs and set-top boxes, as well as encoding hardware and software for broadcasters, should come as standard now, so expect all future UHD transmissions (and many HD ones) to use this new HEVC standard.

Immersive audio: Atmos and beyond

The best quality level for broadcast surround sound is currently 7.1-channel Dolby Digital Plus. Higher channel counts are possible for a more immersive experience, and Dolby and DTS have taken different approaches to delivering them.

Dolby Atmos for the home allows for up to nine speakers at the base level, up to four height channels and an LFE channel for a subwoofer. Dolby Atmos is already available on selected titles on streaming video platforms Netflix and Vudu, and on a few dozen 4K and Blu-ray discs. Sky even broadcasts its Ultra HD Premier League football in Dolby Atmos too.

DTS:X, which offers three-dimensional sound without needing extra speakers, is slowly gaining traction, with a handful of new Blu-rays supporting the format.

Neither Dolby Atmos nor DTS:X is restricted to the new Ultra HD Blu-ray standard: both will work with the ‘old’ Blu-ray standard as well. The next step for this next-gen audio will be more content and compatibility with broadcast TV.

Meanwhile, LG's 2017 OLED TVs will soon receive an update to fully support Dolby TrueHD, a lossless surround-sound codec that supports eight channels of 24-bit/96kHz (or six channels of 24-bit/192kHz) commonly found on Blu-rays.

Wide Colour Gamut (WCG)

Wide Colour Gamut means the adoption of a larger colour space than the one specified for Full HD TV, which was known as BT.709 (or Rec.709).

The new standard colour space for 4K resolutions is called BT.2020 (or Rec.2020). It offers a much wider range of colours and greater saturation than the Rec.709 standard.

BT.2020 is also one of the requirements needed for a 4K HDR product to be certified as UHD Premium, so it’s a promise of a more realistic colour representation.

HDR requires Wide Colour Gamut, although the use of WCG does not automatically imply HDR. All 4K TVs currently on the market that don’t support HDR should still support WCG, even though it’s barely mentioned. The aim is to give ample room for future picture improvement, as the wide colour space spec extends far beyond what current screens can handle (it can apply to 8K resolutions, for instance).

When it comes to creating the perfect picture in the future, WCG will no doubt play a crucial part.

High Frame Rate (HFR)

High frame rates are, essentially, anything higher than the traditional 24 frames per second (fps) used in films, or the 25/50fps (in Europe) and 30/60fps (North America) used for broadcasting standard, high definition and 4K content on TV.

In the drive to improve picture quality led by 4K resolution, there is scope to go even further, up to 100 or 120fps. Higher frame rates should reduce motion blur and judder, and offer a smoother picture as a result.

Ang Lee’s Billy Lynn’s Long Halftime Walk (2016) is one example of a film made in HFR. It was shot in native 4K at the high frame rate of 120fps. However, you won’t be able to see the film’s full spec in your home, as 4K Blu-ray discs can only hold native 4K content at up to 60fps. Meanwhile, your 4K TV will only support frame rates up to 50 or 60fps.
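One simple way to picture the difference is to work out how long each frame stays on screen at the frame rates mentioned above. This is only a sketch of the arithmetic. It says nothing about how a particular TV processes motion, and the names used are illustrative.

```python
# Time each frame stays on screen at the frame rates mentioned above.
FRAME_RATES = (24, 25, 30, 50, 60, 100, 120)


def frame_interval_ms(fps):
    """Return the duration of a single frame in milliseconds."""
    return 1000 / fps


for fps in FRAME_RATES:
    print(f"{fps:>3}fps: {frame_interval_ms(fps):.2f} ms per frame")
```

The shorter the interval, the smaller the jump between successive frames, which is where the smoother look of HFR comes from.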

With 4K and HDR established for streaming, discs and broadcast, could the next step towards improving picture quality lie in enabling higher frame rates? Watch this space.

8K resolution

Even with UHD, HDR, WCG and HFR, the pursuit of better picture quality hasn’t come to an end. The Japanese broadcast industry is pushing ahead for a resolution of 7680 × 4320p, which it has called 8K. Others refer to this standard as Ultra HD 2 (UHD-2) as opposed to the existing UHD-1.
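To put that resolution in context, here is a short sketch comparing the total pixel count of 8K (UHD-2) with 4K (UHD-1) and Full HD. The dictionary labels and function name are illustrative only.

```python
# Compare the total pixel count of 8K with 4K and Full HD.
RESOLUTIONS = {
    "Full HD": (1920, 1080),
    "UHD-1 (4K)": (3840, 2160),
    "UHD-2 (8K)": (7680, 4320),
}


def total_pixels(name):
    """Return width x height for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


eight_k = total_pixels("UHD-2 (8K)")
for name in RESOLUTIONS:
    count = total_pixels(name)
    print(f"{name}: {count:,} pixels (8K has {eight_k // count}x as many)")
```

Every link in the production and delivery chain has to cope with that extra picture data, which helps explain the industry's caution.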

Moving to UHD-2 would have huge repercussions throughout the entire production and delivery chain, so few outside of Japan are prepared to even talk about it yet. LG, Samsung, Sharp and several Chinese manufacturers all showcased 8K displays at CES 2015 in Las Vegas, but apart from being technology statements, the only practical use for these products in the foreseeable future is likely to be digital signage.

However, at IFA 2017 things took a surprise turn. Sharp boldly claimed that a 70in consumer-ready 8K TV will be available to buy in 2018. We shall see.

Korea is aiming for the 2018 Winter Olympics in PyeongChang to be shown in 8K, while Japan is hoping the 2020 Tokyo Olympics will be broadcast in 8K.

An outside bet could be the return of 3D TV (remember that?). 8K allows for autostereoscopic 3D, which means the possibility of glasses-free 3D with an effective resolution of 4K.

Modular displays

The switch from analogue, 4:3, SD TV sets to digital, 16:9, HD TV sets was driven by the flat-panel form that plasma and LCD displays enabled - in stark contrast to their CRT predecessors.

Could there be another such transition in the future to drive adoption of 8K 120fps displays? Certainly, if TV dimensions keep growing, the sets could become too large to handle.

One format that manufacturers such as Sony, Samsung and Google have started working on is modular screens. These potentially giant screens would be made from compact, stackable building blocks.

If this technology becomes available commercially at affordable prices, it could allow for wall-to-wall, floor-to-ceiling immersive displays in your living room. Less TV screen, more TV wall.

Flexible and transparent displays

Who says a TV has to stay fixed on a wall? Super-thin OLED panels have paved the way for more creative display tech, such as flexible screens, roll-up screens and transparent screens.

LG Display has invested £580m in flexible OLED displays, which are being produced primarily for advertising signage.

We’ve already seen bendy OLED screens from LG: its ‘Wallpaper’ TV (the 77in W7 OLED) is just 5mm thick and wobbles a fair bit. LG’s latest prototype goes one step further - it's a completely transparent screen you can roll into a tube.

At IFA 2017, Panasonic showed off a stunning 4K OLED transparent screen that uses an ‘intelligent glass’ display to seamlessly blend into your home's decor.

We imagine it'll be a while before we see screens like this in our homes, but in the meantime, we can marvel at how far the tech has come.

Of course, we can only hope that all this technology will trickle down to consumer TVs eventually.

In the case of 4K and HDR, it already has. It took its time, but with 4K HDR content now widely available through physical discs, online streaming and TV broadcasts, now is the perfect time to be looking into getting the latest TV technology in your home.

There’s plenty of innovation – from 4K to OLED to HDR – readily available, but if there's one thing we know about consumer technology, it's that there's always something new on the horizon.

What Hi-Fi?, founded in 1976, is the world's leading independent guide to buying and owning hi-fi and home entertainment products. Our comprehensive tests help you buy the very best for your money, with our advice sections giving you step-by-step information on how to get even more from your music and movies. Everything is tested by our dedicated team of in-house reviewers in our custom-built test rooms in London, Reading and Bath. Our coveted five-star rating and Awards are recognised all over the world as the ultimate seal of approval, so you can buy with absolute confidence.