Any HDMI connection can now be labelled as HDMI 2.1, and that's not okay

The HDMI Licensing Administrator has decreed that all HDMI sockets are now 2.1, even if they're not

So apparently there are no longer HDMI 2.0 ports and HDMI 2.1 ports. According to a recent proclamation by They Who Decide These Things, the HDMI Licensing Administrator, now there are only HDMI 2.1 ports.

Even if you haven’t a clue what we’re talking about here, you might be thinking this sounds like a good thing. Why confuse the marketplace by having two generations of HDMI port in play when one will do? Unfortunately, though, the decision to state that all HDMI ports on new equipment can call themselves HDMI 2.1 is anything but a good thing, since it doesn’t actually mean that all those HDMI ports really are HDMI 2.1 ports in terms of the features they can deliver. In other words, not all so-called HDMI 2.1 ports are equal.

In practical terms, whatever they might be called, there really are going to be HDMI 2.0 and 2.1 ports in circulation, with all the differences in supported features that entails. So calling them all the same thing looks set to make an already ridiculously confusing situation even more confusing.

Making the right connection

To get a fuller idea of just how unhelpful this is, let’s start by looking back at our established relationship with and understanding of AV connectivity, and the evolution of the HDMI port.

Once upon a time you used to know exactly what a particular connection on your TV could do for you. Composite video inputs gave you crappy composite video feeds. S-Video always gave you a cleaner picture than composite video. Component video could give you purer colours than S-Video and even – whoot – HD video support. And if a Scart was there, it would usually be able to do lovely RGB Scarty stuff. Albeit only in SD.

The arrival of digital video, though, and the HDMI connector introduced to carry it, made things simpler in one way but a whole lot less straightforward in many others. With HDMI you’ve got a connection that as if by magic can keep evolving to take on new video and sound-carrying properties without having to change its essential design.

From the early HD, SDR days of HDMI, for instance, we now have HDMI connections that can handle 8K resolutions at 60Hz refresh rates, 4K with 120Hz refresh rates, all sorts of high dynamic range formats (HDR), variable refresh rates (VRR), automatic low latency mode switching (ALLM), lossless Dolby Atmos sound… Basically, HDMI has proved to be the ‘Mary Poppins’ bag’ of the connection world.


While this sounds great on paper, though, its evolution over the past couple of decades (yes, it really has been around for that long, and you really are that old) has been an increasingly complicated mess that has consistently caused confusion for consumers and a depressingly long list of issues for manufacturers. And the core reason for all this confusion has just been laid bare by the new ‘all current HDMI 2.0 ports will henceforth be called HDMI 2.1 ports’ proclamation.

The first thing to understand is that HDMI’s long evolution has seen it accompanied by various version numbers, introduced to mark moments of major advancement. So we’ve had, for instance, HDMI 1.4, HDMI 1.4b, HDMI 2.0 and the latest HDMI 2.1 ‘variant’.

This weird mix of decimal and lettered labelling systems over the years already feels more complicated than it needed to be. But what’s really kicked the confusion into overdrive with the move from HDMI 2.0 to 2.1 is the latter’s support for now-key next-gen gaming features that 2.0 can’t manage.

Next-gen gaming features

While you could get lost for days in some of the technical differences between HDMI 2.0 and 2.1 (something we’ll be coming back to later), the really key stuff for most consumers now relates to gaming. Specifically, it comes down to whether or not an HDMI port can handle three things: the 4K at 120Hz outputs now possible from the Xbox Series X, PS5 and high-end PC graphics cards; the variable refresh rates now supported by the Xbox Series X and the latest PC graphics cards (the PS5 is supposedly set to join the VRR party soon, too); and automatic low latency mode, which enables compatible TVs to detect when compatible sources (such as the Xbox Series X again) are playing a game rather than a video, so that the TV can switch into its fastest response mode accordingly.

Until now, the main problem for consumers trying to figure out exactly which features a particular TV can or can’t support has been this: a true HDMI 2.1 port capable of supporting bit rates of 40 or 48Gbps is needed to enjoy all of the latest gaming features (along with the ability to pass Dolby Atmos and DTS:X soundtracks via eARC technology), yet much lower-bandwidth HDMI 2.0 ports could claim HDMI 2.1 support even if they supported just one or two of the HDMI 2.1-associated features, typically eARC and ALLM. For instance, many TVs with HDMI 2.0 ports have in the past supported eARC, even though eARC was introduced with the HDMI 2.1 spec.

This complication has simply been compounded by the decision to allow brands to say that HDMI 2.0 ports are, in fact, HDMI 2.1 ports, even if they don’t necessarily support any HDMI 2.1 features.

Making you work for it

So why has the HDMI Licensing Administrator done it? The most likely argument is that product manufacturers are supposed to make information about each product’s HDMI features obvious in their specifications, while we consumers need to rethink our old ideas about different connections being needed to achieve different results and see HDMI as effectively a menu from which different features can be selected by a particular product manufacturer for a particular product. In other words, we must accept that we need to put in more research ourselves to confirm what an ‘HDMI 2.1’ product actually can and can’t support.

Aside from making the consumer work harder, the extra information sharing and gathering created by rolling two HDMI generations into one perhaps still doesn’t sound too onerous. Maybe it actually is better for consumers to be able to see on a TV’s spec sheet that it supports 4K/120Hz, or VRR, or ALLM, rather than just seeing what generation of HDMI it carries and having to figure out from that what gaming features it likely supports.

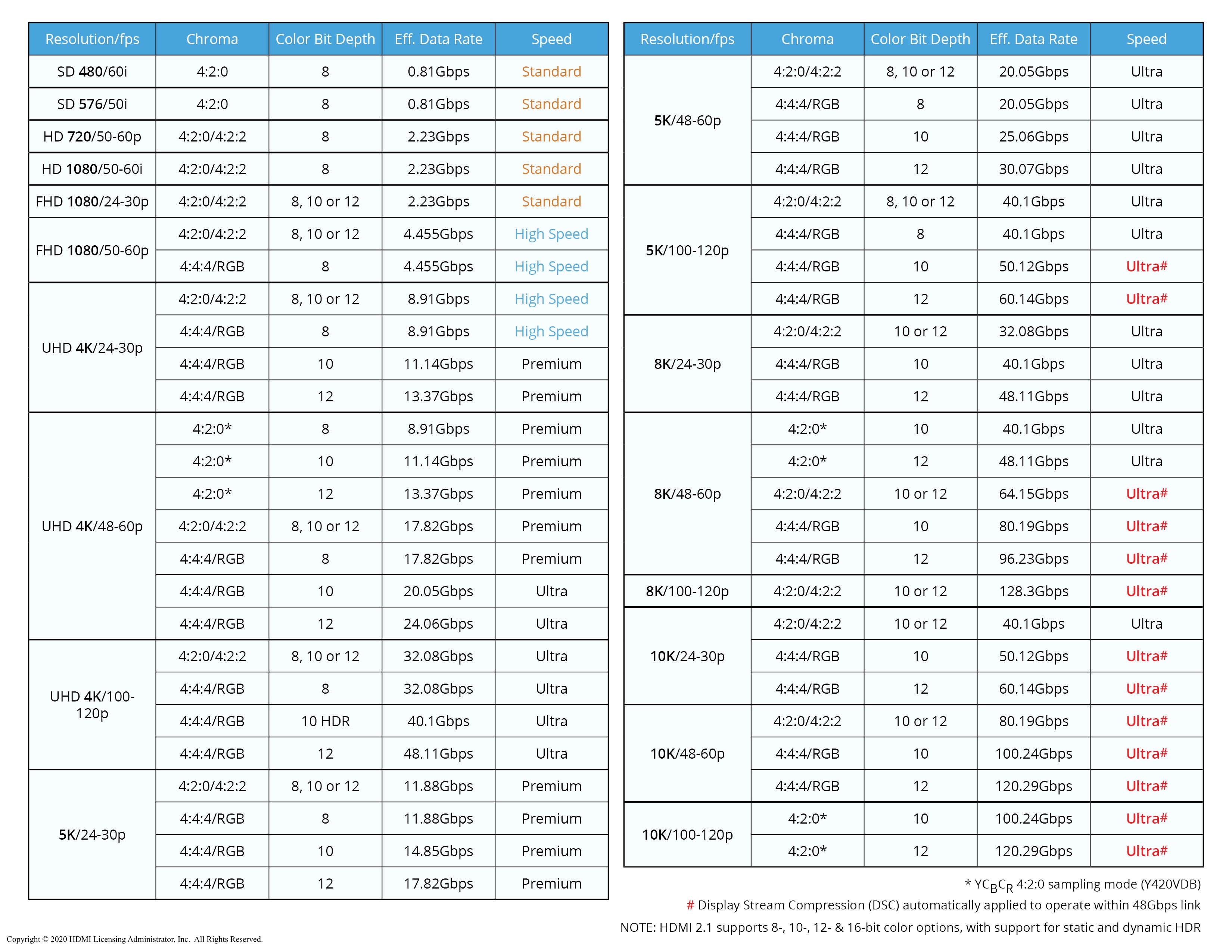

There are actually two big problems with this, though. First, the 4K/120Hz, ALLM and VRR features associated with the latest gaming consoles are only the tip of a far more complicated and convoluted iceberg when it comes to the potential final quality of the experience you might get. Other factors such as compression systems, bit depths and chroma subsampling are also in play, and all of them are fundamentally a function of the different data transmission rates that different HDMI implementations can support. Check out the table of HDMI capabilities below to see just how complex everything can get (you might need to zoom in – that's how bad it is).
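If you want a feel for why those data rates matter so much, here’s a minimal, back-of-the-envelope Python sketch. It assumes the simplest possible model: it multiplies resolution, refresh rate, bit depth and the average number of colour samples per pixel for each chroma subsampling scheme, counting active pixels only. It ignores blanking intervals and link-encoding overhead, so treat every figure as a lower bound rather than what a real HDMI link actually needs. The function and mode names are our own illustration, not anything defined by the HDMI specification; the 18Gbps and 48Gbps ceilings are the maximum bandwidths quoted in this article.

```python
# Hypothetical back-of-the-envelope calculator (our own illustration, not from
# the HDMI specification). It counts active pixels only and ignores blanking
# intervals and link-encoding overhead, so real link requirements are higher.

# Average colour samples carried per pixel for each chroma subsampling scheme.
SAMPLES_PER_PIXEL = {
    "4:4:4": 3.0,  # full colour: one luma plus two chroma samples per pixel
    "4:2:2": 2.0,  # chroma halved horizontally
    "4:2:0": 1.5,  # chroma halved horizontally and vertically
}

# Maximum bandwidths quoted in the article.
HDMI_2_0_MAX_GBPS = 18.0
HDMI_2_1_MAX_GBPS = 48.0


def raw_video_gbps(width, height, refresh_hz, bit_depth, chroma="4:4:4"):
    """Lower-bound uncompressed video data rate in Gbps."""
    bits_per_pixel = bit_depth * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    modes = {
        "4K/60, 8-bit 4:4:4": (3840, 2160, 60, 8),
        "4K/120, 10-bit 4:4:4": (3840, 2160, 120, 10),
        "8K/60, 10-bit 4:4:4": (7680, 4320, 60, 10),
    }
    for label, args in modes.items():
        rate = raw_video_gbps(*args)
        # Because the estimate is a lower bound, only "exceeds" is conclusive.
        print(
            f"{label}: at least {rate:.1f} Gbps | "
            f"exceeds 18Gbps: {rate > HDMI_2_0_MAX_GBPS} | "
            f"exceeds 48Gbps: {rate > HDMI_2_1_MAX_GBPS}"
        )
```

Even on these generous numbers, 4K/120 with 10-bit, full 4:4:4 colour needs nearly 30Gbps before any overhead is counted, comfortably beyond the 18Gbps of HDMI 2.0, while 8K/60 at the same settings overshoots even 48Gbps. That is where chroma subsampling and compression come into play, and why a port’s real bandwidth matters far more than the number on its label.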

Clearly, expecting most consumers to understand the full implications of all today’s HDMI intricacies is wholly unreasonable. In fact, even top manufacturers have fallen foul of them, leading to a number of serious HDMI connection screw-ups in which products as diverse as consoles, AVRs, soundbars and TVs have run into compatibility issues with other devices their makers had expected them to work with.

The biggest problem with the whole HDMI situation now, though, and the thing that’s most exposed by the new ‘everything can say it’s HDMI 2.1’ move, is enforcement, or rather the lack of it. There really hasn’t been any attempt by the HDMI Licensing Administrator or any other consumer technology body to regulate how, or even how honestly, products’ HDMI features are explained to consumers.

While we couldn’t say for certain that brands have used this lack of ‘policing’ to deliberately mislead consumers, it seems pretty reasonable to assume that brands might not be keen on advertising which HDMI-related features a product does NOT have unless compelled to do so. And in these circumstances, consumers no longer being able to tell at a glance whether a TV is using an HDMI 2.0 port with a limiting maximum bandwidth of 18Gbps or an HDMI 2.1 port with a potential maximum bandwidth of 48Gbps is a big deal.

In the end, the recent decision to supposedly ‘simplify’ an HDMI numbering system that if anything could/should have been used more (or at least more effectively) over the years to define very specific levels of functionality actually has exactly the opposite effect – leaving consumers and potentially also manufacturers with even less idea about what’s going on than they had before.

John Archer has written about TVs, projectors and other AV gear for, terrifyingly, nearly 30 years. Having started out with a brief but fun stint at Amiga Action magazine and then another brief, rather less fun stint working for Hansard in the Houses of Parliament, he finally got into writing about AV kit properly at What Video and Home Cinema Choice magazines, eventually becoming Deputy Editor at the latter, before going freelance. As a freelancer John has covered AV technology for just about every tech magazine and website going, including Forbes, T3, TechRadar and Trusted Reviews. When not testing AV gear, John can usually be found gaming far more than is healthy for a middle-aged man, or at the gym trying and failing to make up for the amount of time he spends staring at screens.